Imagine a boardroom scenario. The CTO just pitched an AI project that could save millions. Everyone's excited.

Then someone asks: "But do we have the infrastructure for this?"

The room goes quiet. Heads turn. The excitement fades fast.

Most companies jump into AI thinking cloud credits and a few GPUs will cut it. Six months later, they're stuck with models that won't scale and data pipelines that break constantly.

According to McKinsey, nearly two-thirds of organizations are still in the experimentation or piloting stage, and only a minority have truly scaled AI across the enterprise. Infrastructure readiness is lagging behind AI ambition.

Enterprise AI infrastructure goes beyond servers and storage. It's the foundation that determines whether AI initiatives deliver value or drain budgets. Understanding what AI infrastructure actually means separates companies that succeed from those still running pilots two years later.

Current enterprise systems weren't designed for AI workflows. Standard infrastructure handles predictable workloads like monthly reports, customer databases, and email systems.

AI operates on a different level entirely. Training models requires orchestrating resources that scale dynamically. Real-time inference needs millisecond response times. Data pipelines process terabytes daily across distributed systems.

Storage systems choke on unstructured data volumes. Networks bottleneck during distributed training. Computing resources remain idle or max out with no middle ground. Security protocols can't handle the new data flow patterns.

The solution requires rethinking infrastructure foundations completely.

A 2025 MIT-backed study found that up to 95% of generative AI initiatives fail to deliver measurable business impact, not because the models don’t work, but because existing infrastructure and integration layers can’t support AI at scale.

Specialized processing capabilities are necessary. But infrastructure problems require more than just raw computing power.

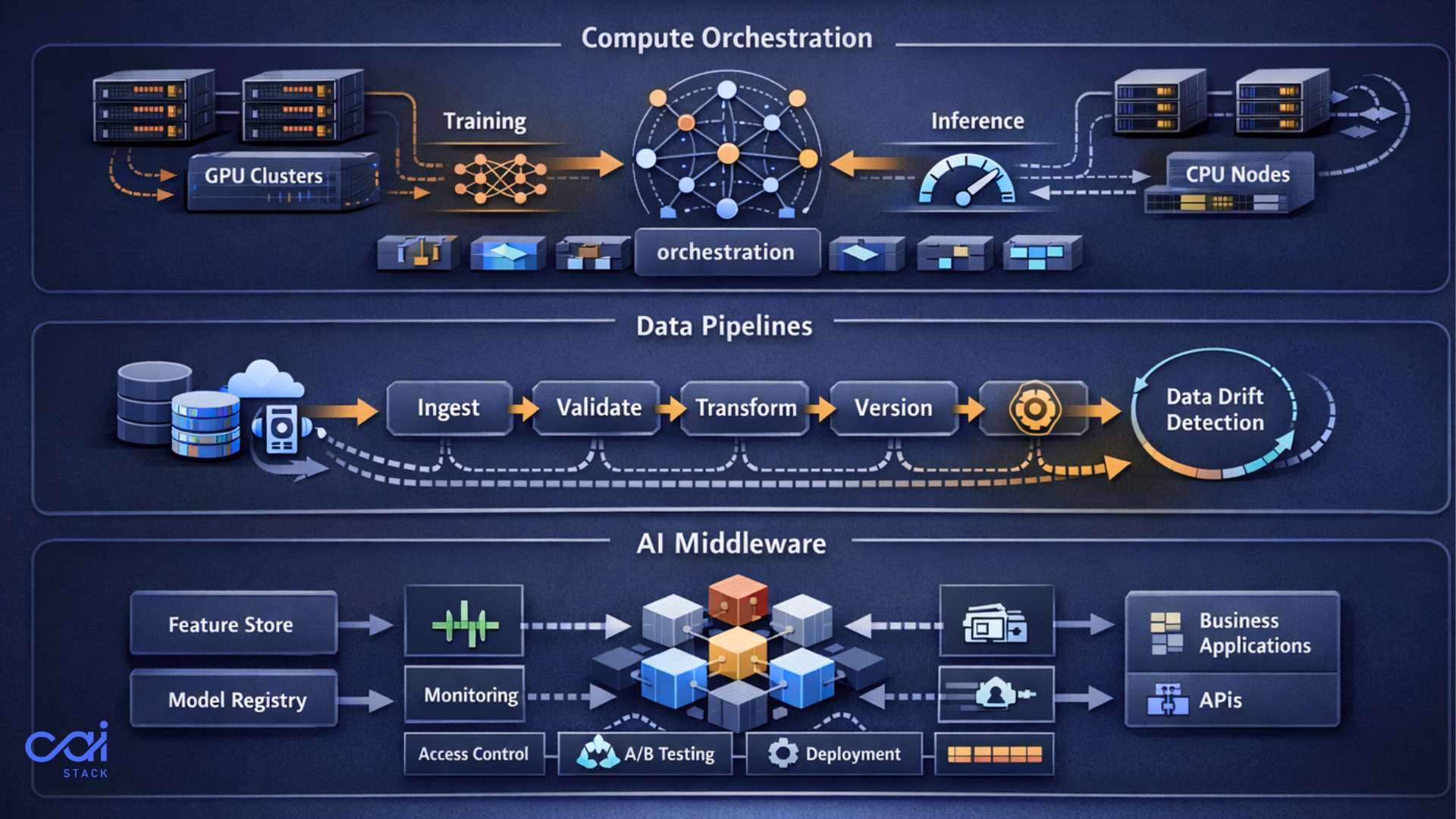

AI and machine learning infrastructure requires orchestration. Compute resources need to scale dynamically based on workload demands. Training runs use different resources than inference. Batch processing needs different setups than real-time predictions.
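To make "scale dynamically based on workload demands" concrete, here is a minimal sketch of a proportional scaling rule, the same shape of formula the Kubernetes Horizontal Pod Autoscaler documents. The function name, the 0.5 utilization target, and the replica limits are illustrative assumptions, not recommendations.

```python
import math


def desired_replicas(current_replicas: int,
                     current_utilization: float,
                     target_utilization: float = 0.5,  # assumed target, tune per workload
                     min_replicas: int = 1,
                     max_replicas: int = 20) -> int:
    """Scale replica count by the ratio of observed to target utilization."""
    if current_replicas < 1 or target_utilization <= 0:
        raise ValueError("need at least one replica and a positive target")
    raw = math.ceil(current_replicas * current_utilization / target_utilization)
    # Clamp so a noisy metric can neither scale to zero nor run away.
    return max(min_replicas, min(max_replicas, raw))
```

A training cluster and an inference service would typically call a rule like this with different targets and limits, which is one reason the two workloads end up with different setups.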

Support for multiple frameworks matters too. Today it's PyTorch. Tomorrow, teams want TensorFlow. Next month, someone discovers a better tool.

Companies getting this right spin up new AI projects in days. They're not waiting for procurement. They're not fighting over limited resources.

Nobody gets excited about data pipelines. But they make or break AI initiatives.

Models are only as good as the data feeding them. Enterprise data lives everywhere. Legacy databases from the 90s. Cloud storage across three providers. SaaS applications that don't talk to each other. On-premises file servers nobody wants to touch. IoT devices generating streams of unstructured data.

Building solid AI infrastructure architecture means creating pathways that move, clean, and prepare this data automatically. The best systems handle this through automated workflows. Data gets ingested, validated, transformed, and versioned without manual intervention.
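The ingest, validate, transform, and version steps can be sketched in a few lines. This is a toy illustration under stated assumptions: the field names (`customer_id`, `amount`) and the content-hash versioning scheme are hypothetical, chosen only to show the flow.

```python
import hashlib
import json


def validate(records):
    """Keep only records with a non-empty customer_id and a numeric amount."""
    return [r for r in records
            if r.get("customer_id") and isinstance(r.get("amount"), (int, float))]


def transform(records):
    """Normalize fields so every downstream step sees one schema."""
    return [{"customer_id": str(r["customer_id"]).strip().upper(),
             "amount_cents": round(r["amount"] * 100)}
            for r in records]


def version_of(records):
    """Content hash: identical data always yields the same version id."""
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


def run_pipeline(raw_records):
    """Run every step without manual intervention and report what was rejected."""
    clean = transform(validate(raw_records))
    return {"version": version_of(clean),
            "rows": clean,
            "rejected": len(raw_records) - len(clean)}
```

Because the version id is derived from the data itself, a model can record exactly which dataset it was trained on.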

Version control matters too. Models trained on Q3 data need to be reproducible in Q4. Data drift detection catches when incoming data no longer matches training distributions.
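One common way to detect drift is the Population Stability Index (PSI), which compares how a feature is distributed in training data versus incoming data. The sketch below is one possible approach, not the only one; the bin count and the small floor used to avoid `log(0)` are assumptions.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a training sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[max(0, min(bins - 1, idx))] += 1  # clamp out-of-range values
        # Floor each share so empty bins do not produce log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A frequently quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift. Treat that threshold as a starting assumption to tune, not a standard.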

This is where most AI projects die quietly.

AI middleware works between models and business systems. Model serving infrastructure handles deployment, versioning, and rollback. Models can't just get thrown onto a server. Canary deployments, A/B testing, and instant rollback are essential.
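A canary deployment with automatic rollback can be sketched as two small functions: one that routes a fixed share of traffic to the new model, and one that decides when to pull it. The names, the 2% tolerance, and the 100-request minimum are illustrative assumptions.

```python
import hashlib


def pick_version(request_id: str, canary_percent: int) -> str:
    """Deterministically send a fixed share of requests to the canary model."""
    digest = hashlib.sha256(request_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in 0..99 per request id
    return "canary" if bucket < canary_percent else "stable"


def should_rollback(canary_errors: int, canary_total: int,
                    stable_errors: int, stable_total: int,
                    tolerance: float = 0.02,      # assumed acceptable gap
                    min_requests: int = 100) -> bool:  # assumed sample floor
    """Roll back when the canary's error rate clearly exceeds the stable one."""
    if canary_total < min_requests:
        return False  # not enough traffic to judge yet
    canary_rate = canary_errors / canary_total
    stable_rate = stable_errors / stable_total if stable_total else 0.0
    return canary_rate > stable_rate + tolerance
```

Hashing the request id keeps routing sticky, so the same caller sees the same model version for the duration of the rollout.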

Gateway layers in front of AI endpoints control access, monitor usage, and enforce rate limits. When 50 different applications hit AI endpoints, traffic management prevents cascading failures. MCP (Model Context Protocol) complements this by standardizing how AI systems connect to enterprise tools and data sources, which simplifies integration across platforms.
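Rate limiting of this kind is often implemented as a token bucket, with one bucket per calling application. The sketch below is a generic illustration rather than part of any specific protocol or product; the class name and parameters are assumptions, and time is passed in explicitly to keep the example deterministic.

```python
class TokenBucket:
    """Allow short bursts up to `capacity`, then a steady `rate_per_sec`."""

    def __init__(self, rate_per_sec: float, capacity: int, now: float = 0.0):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)  # start full
        self.last = now

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, never above capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False  # caller should reject or queue the request


# One bucket per application keeps a noisy client from starving the rest.
buckets = {"billing-app": TokenBucket(rate_per_sec=1.0, capacity=2)}
```

In production the `now` argument would come from a monotonic clock such as `time.monotonic()`.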

Feature stores centralize data preparation. Instead of every data scientist building their own pipelines, features get computed once and reused everywhere. This eliminates inconsistencies between training and production. It can also cut data processing costs by as much as 60-70%.
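The "compute once, reuse everywhere" idea can be shown with a tiny in-memory sketch. Real feature stores add persistence, freshness rules, and point-in-time lookups; the class and the feature name here are hypothetical.

```python
class FeatureStore:
    """Single definition per feature, cached per entity."""

    def __init__(self):
        self._definitions = {}   # feature name -> function of raw data
        self._cache = {}         # (feature name, entity id) -> value
        self.compute_calls = 0   # counts real computations, for illustration

    def register(self, name, fn):
        self._definitions[name] = fn

    def get(self, name, entity_id, raw):
        key = (name, entity_id)
        if key not in self._cache:
            self.compute_calls += 1
            self._cache[key] = self._definitions[name](raw)
        return self._cache[key]


store = FeatureStore()
store.register("avg_order_value", lambda orders: sum(orders) / len(orders))

# Training and serving both read the same definition and the same value.
training_value = store.get("avg_order_value", "customer-42", [10, 20, 30])
serving_value = store.get("avg_order_value", "customer-42", [10, 20, 30])
```

Because both code paths go through one definition, training and production cannot silently diverge on how a feature is calculated.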

Model registries track every version of every model. Who built it? What data was used? How did it perform in testing? When was it deployed? All logged automatically.
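A minimal sketch of a registry that answers those four questions might look like this. It is an in-memory illustration with hypothetical names; production registries persist this metadata and tie it to stored model artifacts.

```python
from dataclasses import dataclass, replace
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: int
    author: str                        # who built it
    dataset_version: str               # what data was used
    metrics: Dict[str, float]          # how it performed in testing
    deployed_at: Optional[str] = None  # when it was deployed, if ever


class ModelRegistry:
    def __init__(self) -> None:
        self._history: Dict[str, List[ModelVersion]] = {}

    def register(self, name, author, dataset_version, metrics) -> ModelVersion:
        versions = self._history.setdefault(name, [])
        entry = ModelVersion(name, len(versions) + 1, author,
                             dataset_version, dict(metrics))
        versions.append(entry)
        return entry

    def mark_deployed(self, name, version, timestamp) -> ModelVersion:
        versions = self._history[name]
        # Records are immutable; deployment creates an updated copy.
        updated = replace(versions[version - 1], deployed_at=timestamp)
        versions[version - 1] = updated
        return updated

    def latest(self, name) -> ModelVersion:
        return self._history[name][-1]
```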

Looking to build a solid foundation without the guesswork? CAI Stack brings together compute, data, and middleware layers into a unified platform. See how it works for your use case.

AI and machine learning infrastructure doesn't get built overnight. But waiting three years isn't necessary either.

Start with the pain points hurting operations right now. Map what actually exists. Where does data live and how does it move? What compute resources exist and who controls them? Which teams are already running AI experiments and how?

This audit reveals the gaps. It also shows hidden assets available to leverage.

Pick a single AI use case that matters to the business. Build a complete infrastructure for just that one project. End-to-end. Data ingestion through model deployment. This becomes the template. The proof of concept. The reference architecture.

Automate from day one. Manual processes don't scale. Automate model training pipelines. Automate testing. Automate deployment. Automate monitoring. Every manual step is a bottleneck waiting to slow things down.

The teams moving fastest have continuous integration and deployment for their models. Code commits trigger automatic testing and deployment.
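In a model CI/CD pipeline, the automatic testing step usually ends in a gate that decides whether a candidate model may be deployed. Here is one hedged sketch of such a gate; the metric names and thresholds are assumptions for illustration.

```python
def promotion_gate(candidate, production=None,
                   min_accuracy=0.90,     # assumed quality floor
                   max_latency_ms=200.0,  # assumed latency budget
                   max_regression=0.01):  # assumed allowed drop vs production
    """Return a list of reasons to block deployment; empty means deploy."""
    failures = []
    if candidate["accuracy"] < min_accuracy:
        failures.append("accuracy below minimum")
    if candidate["p95_latency_ms"] > max_latency_ms:
        failures.append("latency above budget")
    if production and candidate["accuracy"] < production["accuracy"] - max_regression:
        failures.append("regression against production model")
    return failures
```

A CI job would run this after evaluation and fail the build whenever the returned list is non-empty.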

Plan for governance early. Regulations keep tightening. Data privacy laws multiply. Industry standards evolve. Build governance into the foundation from the start. Data lineage tracking shows where every byte originated. Access controls follow least-privilege principles. Audit logs capture every action on every model.
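Least-privilege access and audit logging fit naturally into one small component: deny by default, and record every decision. The roles and permission strings below are hypothetical examples.

```python
# Hypothetical role-to-permission map; anything not listed is denied.
ROLE_PERMISSIONS = {
    "data_scientist": {"model:read", "model:train"},
    "ml_engineer": {"model:read", "model:deploy"},
    "auditor": {"audit:read"},
}


class AuditedAccess:
    def __init__(self):
        self.audit_log = []

    def authorize(self, user, role, action, resource, timestamp):
        """Check the action against the role and log the outcome either way."""
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        self.audit_log.append({
            "timestamp": timestamp,
            "user": user,
            "role": role,
            "action": action,
            "resource": resource,
            "allowed": allowed,
        })
        return allowed
```

Logging denied attempts as well as granted ones is what makes the trail useful during an audit.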

Adding governance later means rebuilding everything.

Teams go from idea to production in weeks instead of quarters. Data scientists spend time on models, not fighting infrastructure. Business units get AI capabilities when they need them.

One financial services company cut its model deployment time from 6 months to 3 weeks. Same models. Same data scientists. Better enterprise AI infrastructure.

The right setup reduces waste everywhere. Compute resources get used efficiently. Data processing doesn't run redundantly. Failed experiments fail fast and cheap.

A retail company reduced its cloud costs by 40% after consolidating its infrastructure. It now runs more models on less budget.

Security vulnerabilities shrink when infrastructure follows consistent patterns. Compliance becomes auditable. Model failures get caught in testing, not production.

Organizations with mature AI infrastructure move faster than competitors. They experiment more. They fail faster. They learn quicker. They scale successes immediately.

While competitors are still debating infrastructure choices, these companies are launching new AI-powered products. That gap compounds over time.

Developer productivity increases 3-5x when infrastructure handles the repetitive work. Data scientists spend 80% of their time on data preparation today. Cut that to 30%, and the time left for modeling grows from 20% to 70%, which is 3.5x more time spent delivering models.

Time-to-market shrinks from quarters to weeks. Every month gained has real revenue implications. For a product generating $10M in annual revenue, launching three months earlier is worth roughly $2.5M, a quarter of a year's revenue.
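The arithmetic behind that figure is simple enough to reuse for other products. This sketch assumes revenue accrues evenly across the year and that an earlier launch adds those months of revenue rather than just shifting them; both are simplifications.

```python
def early_launch_value(annual_revenue: float, months_earlier: float) -> float:
    """Revenue gained by launching earlier, assuming even monthly revenue."""
    return annual_revenue * months_earlier / 12


# $10M annual revenue, launched three months earlier
value = early_launch_value(10_000_000, 3)  # 2500000.0
```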

Infrastructure costs decrease 30-50% through better resource utilization. Auto-scaling prevents over-provisioning. Workload optimization reduces waste.

Risk reduction is harder to quantify but easier to understand. One data breach can cost millions. One compliance violation can halt operations. One biased model can damage a brand's reputation.

Revenue opportunities emerge when AI infrastructure architecture enables new capabilities. Personalization drives conversion. Predictive maintenance prevents downtime. Fraud detection saves millions.

The foundation determines whether AI delivers business value or burns budget. Thoughtful architecture that aligns with business goals is necessary.

Start with the biggest pain point. Build one complete solution. Learn from that. Expand deliberately.

Compute, data, and middleware form the essential pillars. All three need equal attention. Automation eliminates bottlenecks and reduces errors.

Ready to build infrastructure that scales with AI ambitions? CAI Stack eliminates the complexity of managing compute, data, and middleware layers separately. Our solution gives organizations the foundation to move from proof-of-concept to production without the usual headaches. Schedule a conversation with our team to explore what's possible.

AI infrastructure doesn't have to be a bottleneck. Make it the competitive advantage it should be.
