Traditional FinOps was built for predictable workloads. AI is anything but predictable.

Model training can run for hours or weeks. Inference costs change with user behavior. Experiments share infrastructure across teams and timeframes. Without architecture that understands AI workloads, enterprises lose control of spend long before they lose control of performance.

That’s why enterprise FinOps needs an AI-oriented architecture.

Most enterprises are managing AI spending with tools built for the last decade's infrastructure.

It doesn't work.

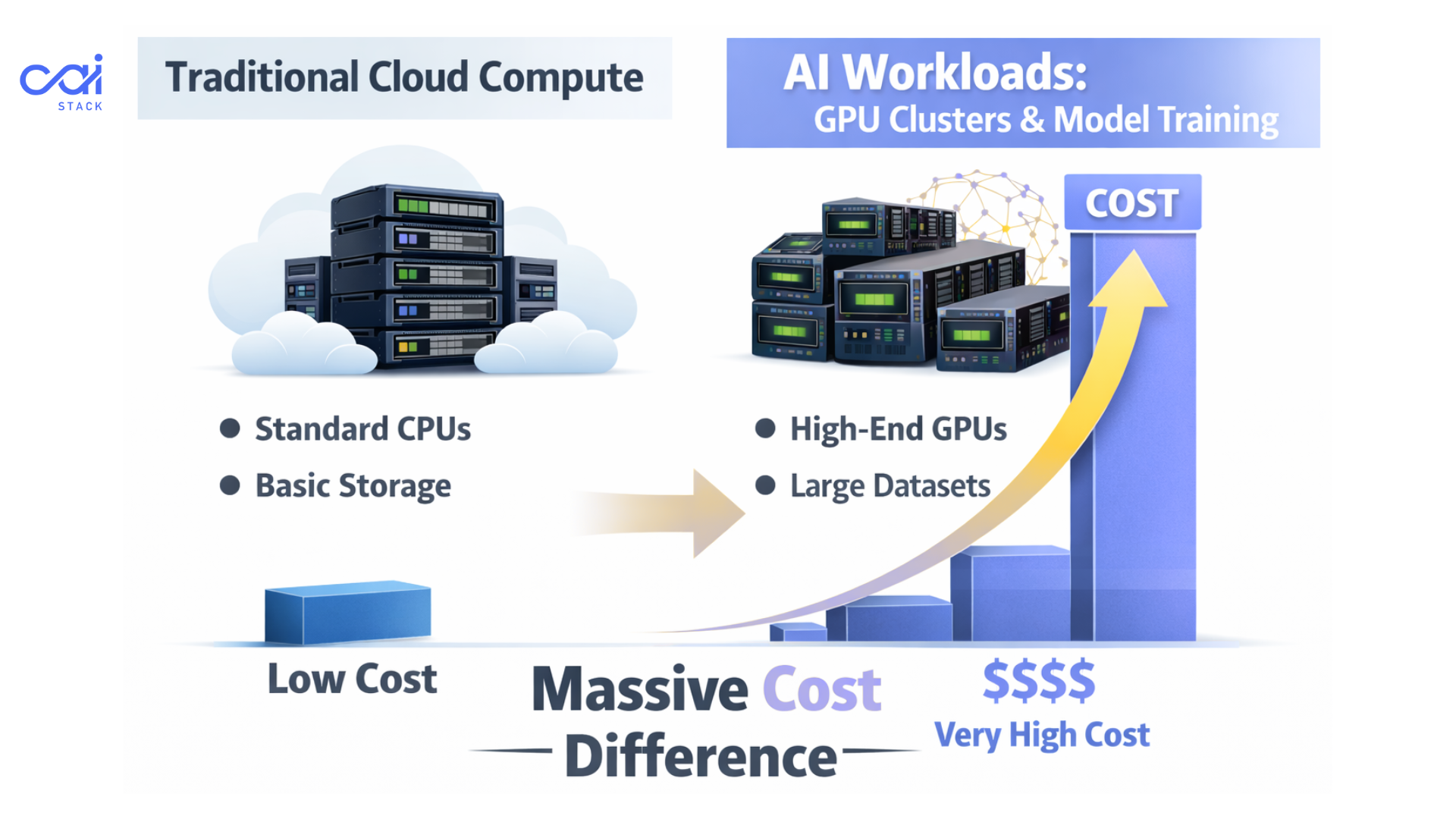

Traditional cloud cost optimization focuses on right-sizing instances, shutting down idle resources, and negotiating better rates. That worked when web servers and databases were your highest costs.

But AI in FinOps introduces different challenges:

Your existing dashboards show costs. They don't show the why behind AI spending.

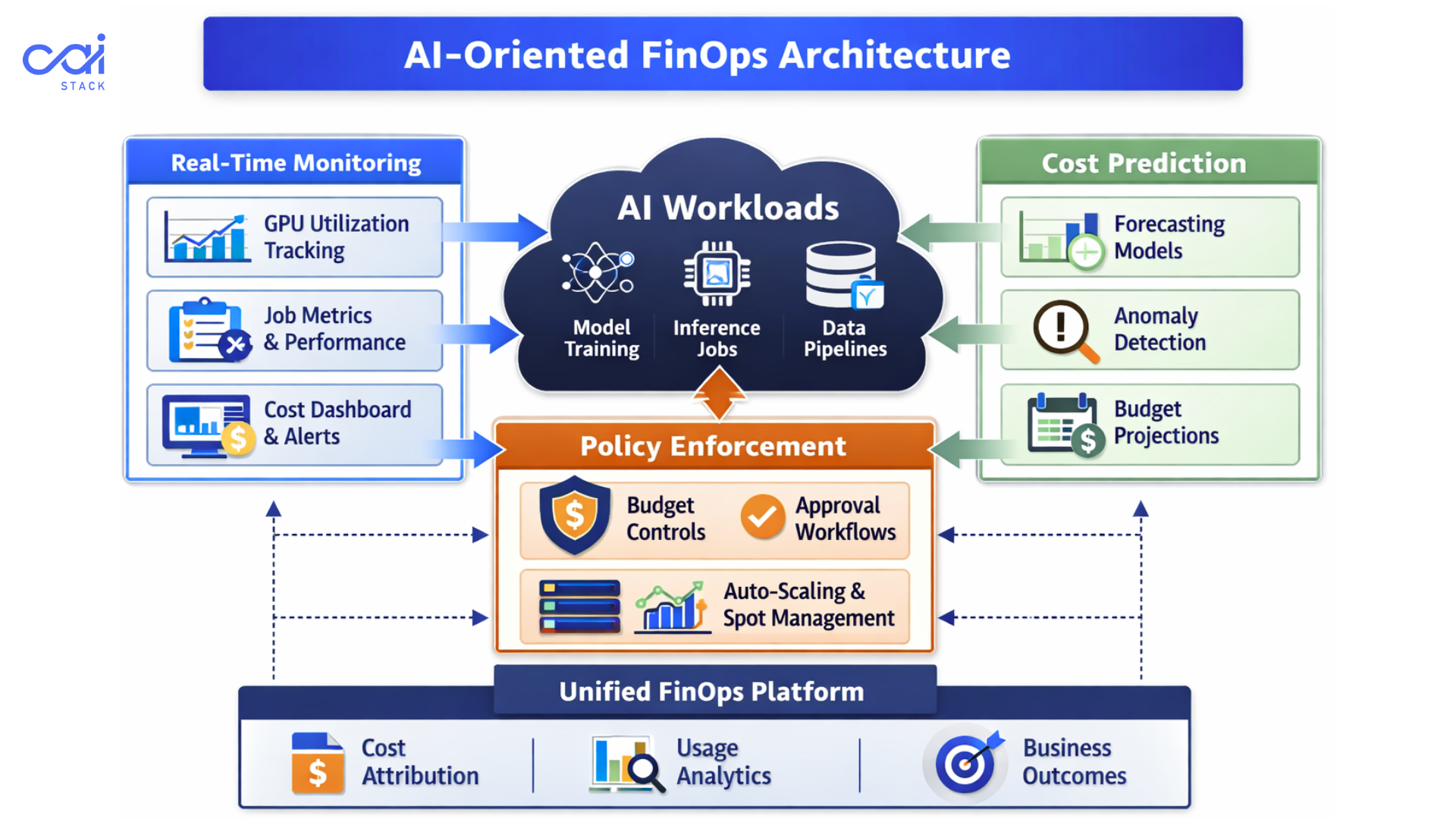

AI in FinOps refers to applying AI-aware systems and automation to manage the cost, governance, and efficiency of AI workloads. Unlike traditional FinOps, which focuses on static cloud resources, AI in FinOps is designed to handle GPU-driven training, unpredictable inference usage, and constant experimentation.

In practice, AI in FinOps gives enterprises real-time visibility into model training and inference costs, enforces spending guardrails before overruns happen, and connects AI infrastructure spend directly to business outcomes. This shift enables teams to optimize GPU utilization, reduce waste, and scale AI initiatives without losing financial control.

Training a large language model might take 3 hours or 30 days. Inference costs fluctuate based on user queries and model size.

Here's what makes FinOps for AI fundamentally different:

You need architecture that understands AI workloads from the ground up.

The FinOps Foundation notes that AI workloads require a fundamentally different cost governance approach than traditional cloud spend, as GPU pricing, model training cycles, and inference usage patterns do not behave like legacy compute resources.

An AI-oriented architecture isn't just about tracking costs. It's about understanding the relationship between spending and outcomes.

You need visibility into every AI job as it runs. Not after the fact. Right now.

Manual optimization doesn't scale when you're running hundreds of AI jobs per week.

Looking to implement these capabilities? CAI Stack provides a unified system for AI in FinOps, giving you visibility and control without the engineering overhead.

Tagging resources isn't enough when multiple teams contribute to one AI project.
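One way to go beyond tags is to split a shared project's bill by actual usage. The sketch below is a minimal illustration, assuming each usage record is a hypothetical `(team, gpu_hours)` pair and that GPU-hours are a fair proxy for cost; a real system would likely weight by GPU type and pricing tier.

```python
from collections import defaultdict


def attribute_cost(total_cost, usage_records):
    """Split a project's cost across teams in proportion to GPU-hours used.

    usage_records is an iterable of (team, gpu_hours) tuples. This schema is
    an assumption for illustration, not a standard format.
    """
    hours_by_team = defaultdict(float)
    for team, gpu_hours in usage_records:
        hours_by_team[team] += gpu_hours

    total_hours = sum(hours_by_team.values())
    if total_hours == 0:
        # No recorded usage: nothing to attribute.
        return {team: 0.0 for team in hours_by_team}

    return {
        team: total_cost * hours / total_hours
        for team, hours in hours_by_team.items()
    }


# Made-up example: two teams sharing one $12K training project.
shares = attribute_cost(
    12_000.0,
    [("research", 300), ("platform", 100), ("research", 200)],
)
```

Here `research` logged 500 of the 600 GPU-hours, so it carries five sixths of the bill even though both teams worked under the same project tag.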

Your monitoring needs to capture cost per GPU hour, model training time, inference latency, data transfer costs, and idle resource time.

All in one place. If cost data lives in one system and performance metrics in another, you'll never see the full picture.
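As a rough sketch of what "one place" could look like, the record below keeps cost and performance fields for a job side by side. The class name, field names, and rates are assumptions made for illustration, not the schema of any particular tool.

```python
from dataclasses import dataclass


@dataclass
class JobMetrics:
    """Cost and performance data for one AI job (hypothetical schema)."""

    job_id: str
    gpu_hours: float                  # total GPU hours billed
    gpu_hourly_rate: float            # USD per GPU hour
    idle_gpu_hours: float             # billed hours with no useful work
    data_transfer_cost: float         # USD
    training_hours: float             # wall-clock training time
    p95_inference_latency_ms: float   # 0.0 for training-only jobs

    @property
    def compute_cost(self) -> float:
        return self.gpu_hours * self.gpu_hourly_rate

    @property
    def idle_cost(self) -> float:
        return self.idle_gpu_hours * self.gpu_hourly_rate

    @property
    def total_cost(self) -> float:
        return self.compute_cost + self.data_transfer_cost

    @property
    def idle_fraction(self) -> float:
        return self.idle_gpu_hours / self.gpu_hours if self.gpu_hours else 0.0


# Made-up numbers for a single training job.
job = JobMetrics(
    job_id="train-001",
    gpu_hours=400,
    gpu_hourly_rate=2.5,
    idle_gpu_hours=40,
    data_transfer_cost=150.0,
    training_hours=50,
    p95_inference_latency_ms=0.0,
)
```

Because spend and utilization live on the same record, a question like "how much of this job's bill was idle time?" becomes a single property lookup instead of a join across two systems.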

Budget controls that prevent overspend before it happens. If a team has $50K left in its quarterly budget, it can't launch a $60K job. The system blocks it automatically.
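A pre-launch check like this can be very small. The following is a minimal sketch, assuming the scheduler already has an estimated cost for the job; `BudgetExceeded` and `check_budget` are hypothetical names, not part of any real platform's API.

```python
class BudgetExceeded(Exception):
    """Raised when a job's estimated cost exceeds the remaining budget."""


def check_budget(remaining_budget, estimated_cost):
    """Return the budget left after the job, or block the launch.

    Both amounts are in USD. The check runs before any resources are
    provisioned, so an over-budget job never starts.
    """
    if estimated_cost > remaining_budget:
        raise BudgetExceeded(
            f"Job needs ${estimated_cost:,.0f} but only "
            f"${remaining_budget:,.0f} remains this quarter"
        )
    return remaining_budget - estimated_cost


# The scenario from the text: $50K left, $60K job requested.
try:
    check_budget(50_000, 60_000)
    launched = True
except BudgetExceeded:
    launched = False
```

Raising an exception rather than returning a flag is a deliberate choice in this sketch: a caller that forgets to check the result still cannot launch the job.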

Resource reservation management. High-priority projects get guaranteed GPU access during business hours. Lower-priority work runs on spot instances during off-peak times.
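The routing rule described above might be sketched as follows. The business-hours window (weekdays, 09:00 to 18:00), the pool names, and the choice to defer low-priority work that arrives during business hours are all assumptions for illustration.

```python
from datetime import datetime

BUSINESS_HOURS = range(9, 18)  # assumed window: 09:00 to 17:59 local time


def is_business_hours(when: datetime) -> bool:
    # weekday() is 0 for Monday through 4 for Friday.
    return when.weekday() < 5 and when.hour in BUSINESS_HOURS


def choose_capacity(priority: str, submitted_at: datetime) -> str:
    """Pick a capacity pool for a job (hypothetical pool names)."""
    if priority == "high":
        # High-priority projects get guaranteed GPU access.
        return "reserved"
    if is_business_hours(submitted_at):
        # Lower-priority work waits for the off-peak window.
        return "deferred"
    return "spot"


monday_morning = datetime(2024, 1, 8, 10, 0)
monday_night = datetime(2024, 1, 8, 22, 0)

high_day = choose_capacity("high", monday_morning)
low_day = choose_capacity("low", monday_morning)
low_night = choose_capacity("low", monday_night)
```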

Forecast costs based on planned experiments. Based on similar projects, the system estimates the cost of new model training at between $75K and $95K. Finance can plan accordingly.
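A very simple version of this kind of forecast takes the cheapest and most expensive cost per GPU-hour seen on similar projects and applies them to the planned workload. The sketch below assumes that approach; the historical figures are made up to mirror the range in the text, and a production forecaster would likely use a richer model.

```python
def forecast_cost_range(similar_projects, planned_gpu_hours):
    """Estimate a (low, high) cost range in USD for a planned training run.

    similar_projects is a list of (gpu_hours, actual_cost) tuples from past
    work. This format is an assumption for illustration.
    """
    rates = [cost / hours for hours, cost in similar_projects if hours > 0]
    if not rates:
        raise ValueError("Need at least one similar project with GPU-hours")
    return min(rates) * planned_gpu_hours, max(rates) * planned_gpu_hours


# Hypothetical history: three comparable training runs.
history = [
    (8_000, 60_000),    # $7.50 per GPU-hour
    (12_000, 114_000),  # $9.50 per GPU-hour
    (10_000, 85_000),   # $8.50 per GPU-hour
]

low, high = forecast_cost_range(history, planned_gpu_hours=10_000)
```

With this invented history, a planned 10,000 GPU-hour run lands between $75K and $95K, which is the kind of range finance can budget against.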

Identify cost anomalies before they spiral. A training job normally costing $5K is on track for $15K. The system flags it before you've blown your budget.
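One simple way to catch this early is to project the final cost from spend so far and compare it with the job's usual cost. The sketch below assumes spend grows roughly linearly with training steps and uses an arbitrary 1.5x threshold; both are illustrative assumptions, and the function names are hypothetical.

```python
def project_final_cost(spend_so_far, completed_steps, total_steps):
    """Linearly extrapolate final cost from progress so far (USD)."""
    if completed_steps <= 0:
        raise ValueError("Need at least one completed step to project cost")
    return spend_so_far * total_steps / completed_steps


def is_anomalous(projected_cost, baseline_cost, threshold=1.5):
    """Flag a job whose projected cost exceeds threshold x its usual cost."""
    return projected_cost > baseline_cost * threshold


# The scenario from the text: a job that normally costs $5K has already
# spent $6K at 400 of 1,000 steps (made-up progress numbers).
projected = project_final_cost(6_000, completed_steps=400, total_steps=1_000)
flagged = is_anomalous(projected, baseline_cost=5_000)
```

The projection comes out at $15K, three times the baseline, so the job is flagged while 60% of its run, and most of the overspend, is still ahead.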

Track these metrics:

According to CIO, enterprises adopting AI at scale are increasingly turning to FinOps practices to regain visibility and control over rapidly escalating AI and GPU-driven cloud costs.

Enterprises implementing AI-oriented FinOps architectures see:

30-50% reduction in AI infrastructure costs within six months. Not from cutting AI initiatives, but from eliminating waste.

3-4x faster experiment iteration. Data scientists ship models faster when they can quickly spin up resources.

Better alignment between AI and business strategy. Leadership makes informed decisions with clear visibility into costs and outcomes.

The cost of not implementing this? You're already paying it. Every day without proper cloud cost optimization for AI, you're overspending on infrastructure or underdelivering on projects.

The case for an AI-oriented FinOps architecture isn't theoretical. It's an urgent business requirement.

Your AI initiatives are too expensive to manage with tools built for a different era. GPU costs, unpredictable workloads, and complex attribution require purpose-built solutions.

Start with visibility. Add guardrails. Enable optimization. Scale intelligence.

Ready to take control? CAI Stack offers a complete platform for cloud cost optimization built specifically for AI workloads, giving you real-time visibility, automated optimization, and predictive analytics in one unified solution. See how enterprises are cutting AI costs by 40% while accelerating model deployment.
